Week two is in the bag! Monday, the learning curve and intensity level increased. We engaged in a conversation about how we could tie our proposed lesson plan to our “main” objective. At this point, research is in full swing. I am constantly brainstorming a possible lesson to create. I have a great idea. I just have to refine it for the classroom.

I immersed myself deeper in computer vision. I began to understand the programming behind it and how powerful it is. As a task, I used OpenCV to display the feed from my computer’s webcam and to draw bounding boxes around the face and eyes in an image. I also used OpenCV to make a copy of an image file.

Working with Python to this degree has increased my skill level. Daily, I am reimagining how I could take the lessons learned and incorporate them into my CS curriculum.

Tuesday, I read an article explaining Mask R-CNN. Simply put, it detects each object instance in an image and separates it from the other instances in the same image, and it does so quickly. This technique would be very beneficial when searching for specific criteria within hundreds or thousands of images.

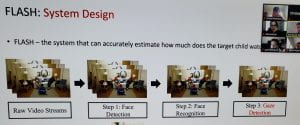

Our guest speaker this week was Amil, a graduate student at Rice University. He is currently working on face and gaze detection in children. The system he used is called FLASH (Family Level Assessment of Screen use at Home). An initial raw video feed was played for the child. The system has three steps:

- Face Detection

- Face Recognition

- Gaze Detection

The system can accurately estimate how much the “target” child watches the video feed. This study could have far-reaching applications for schools and teachers. Teachers are using computers and other devices with screens more and more due to online learning. This system could help teachers know whether or not a student is paying attention to the lessons on their computer screen.
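To make the idea concrete, here is a small sketch of how per-frame results from the three steps could be combined into a watch-time estimate. It is only an illustration under my own assumptions, not how FLASH is actually implemented: the `FrameResult` record, its field names, and the `watch_seconds` function are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class FrameResult:
    """Hypothetical per-frame output of the three steps."""
    face_detected: bool    # step 1: a face was found in the frame
    is_target_child: bool  # step 2: the face was recognized as the target child
    gaze_on_screen: bool   # step 3: the gaze was estimated to be on the screen


def watch_seconds(frames, fps):
    """Estimate seconds of watching: count frames that pass all three steps."""
    if fps <= 0:
        raise ValueError("fps must be positive")
    watching = sum(
        1 for f in frames
        if f.face_detected and f.is_target_child and f.gaze_on_screen
    )
    return watching / fps
```

A frame counts as "watching" only when all three steps agree, so another person looking at the screen, or the target child looking away, does not add to the total.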

Wednesday, hump day! Today we had a great discussion about topics and ideas for our “Teach Engineering” lesson plan. We are gathering more and more insight into what we can expect when we dive into creating our lesson. That led us to a talk and Q&A session with Jimmy Newland.

Jimmy Newland is a past participant of the PATHS-UP program. He took us through the steps necessary to take our lesson plan idea from conception to reality. He walked us through his published lesson. His lesson, “Visualize your Heartbeat,” was very creative, intuitive, and engaging.

Thursday, we talked with Mary Jim. We discussed the computer vision and machine learning article “Appearance-Based Gaze Estimation in the Wild” by Xucong Zhang, Yusuke Sugano, Mario Fritz, and Andreas Bulling. The article discussed the different methods used to monitor test subjects while they looked at objects on their computer screens. The test subjects were recorded in varying lighting conditions, at various times of the day, and in various seating conditions. The researchers used over 213,000 images before publishing their results.