This week was a short one, which made it fast and furious! On Monday, I continued working on programming assignments and the required readings. I am still relatively new to programming. Although I was never formally trained in CS (I did not earn a CS degree), I love everything I have discovered and learned so far about programming. That said, I had not heard of Kaggle before this week. Kaggle is the world’s largest data science community, with powerful tools and research resources to help programmers achieve their data science goals.

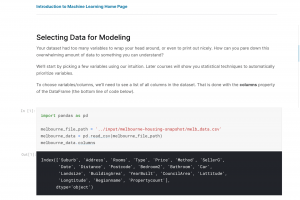

I started with a tutorial to help me understand Kaggle’s environment. The tutorial showed me how to import large amounts of data, then tested my ability to read a data file and interpret specific statistics about that data. I applied techniques to filter the data, which helped me build a data model. The last challenge was to construct my very own data model! Data mining is new to me, but I can already see the benefits, the applications, and the power behind learning this new skill.
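For readers who want to see what those steps look like in code, here is a minimal sketch of the same workflow: read a data file, check a statistic, filter the data, and build a small model. It is not the tutorial's code. The data is made up, the column names `rooms` and `price` are hypothetical, and the model is a plain straight-line fit written with only the Python standard library.

```python
import csv
import io
import statistics

# Hypothetical sample data standing in for a real CSV file from Kaggle.
CSV_TEXT = """rooms,price
2,200
3,300
4,400
5,500
12,9999
"""


def load_rows(text):
    """Read CSV text into a list of dicts with numeric values."""
    reader = csv.DictReader(io.StringIO(text))
    return [{key: float(value) for key, value in row.items()} for row in reader]


def fit_line(xs, ys):
    """Fit y = slope * x + intercept by ordinary least squares."""
    mean_x = statistics.mean(xs)
    mean_y = statistics.mean(ys)
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


# Step 1: read the data file.
rows = load_rows(CSV_TEXT)

# Step 2: look at a summary statistic (the outlier pulls the mean up).
mean_price = statistics.mean(row["price"] for row in rows)

# Step 3: filter out the row that looks like an outlier.
filtered = [row for row in rows if row["rooms"] <= 10]

# Step 4: build a simple model and use it to make a prediction.
slope, intercept = fit_line(
    [row["rooms"] for row in filtered],
    [row["price"] for row in filtered],
)
predicted = slope * 6 + intercept

print(f"mean price before filtering: {mean_price:.1f}")
print(f"predicted price for 6 rooms: {predicted:.1f}")
```

The filter step matters here: leaving the 12-room row in would drag the fitted line far away from the other four points.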

I can see myself using this tool in my CS classrooms. The Jupyter notebook interface includes everything you need to get started working with data, and the notebook environment that contained the code was intuitive and easy to use. By the end of the tutorial, I had created my own data model. Developing the skill set to import, disaggregate, and manipulate data, and then use it to make plausible predictions, is invaluable!

Before I became an educator, I was a Yield Manager for my last employer. I distinctly remember working with warehoused data, year over year, to forecast current and future pricing models. I used Microsoft Excel pivot tables and filtering software, which were not very flexible. Machine learning would have made a significant difference in building, disaggregating, and using that data for my forecasting models!

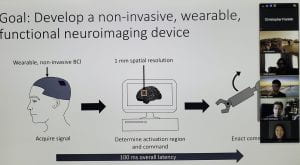

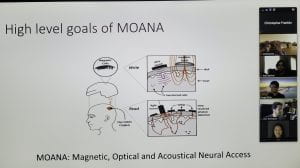

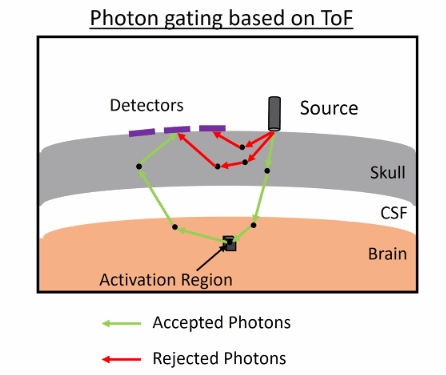

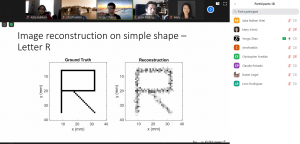

Each week we have guest speakers, some in graduate school and others working in the computer science industry. This week was no exception. Yongyi Zhao is a graduate student at Rice University. He is currently working on a device that will someday (hopefully) be able to read brain waves. His research and testing are fascinating: he is working on taking a human thought and re-creating it as a digital image. The applications for such a device are endless! From hospitals to education to individuals, the research and work taking place is astounding! Below is a prototype of a wearable device that could be worn unobtrusively (still in development). (Image 1)

The MOANA project (Magnetic, Optical and Acoustic Neural Access) aims to develop a device that would be able to read and write brain waves and turn that information into text or images. This is mind-blowing research! Some of the students at Rice are working on projects that could someday change the world!

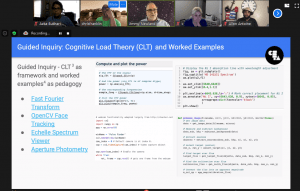

The SWITCH PATHS-UP program is the fifth program I have applied to and been accepted into. Each one raises the bar higher and higher. My students, my co-workers, and I have all benefited from the previous programs I attended.

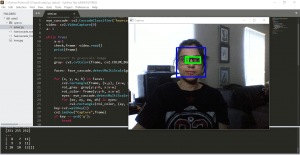

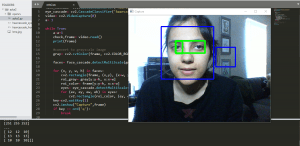

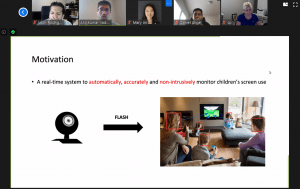

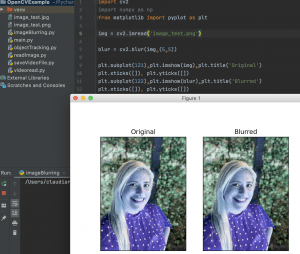

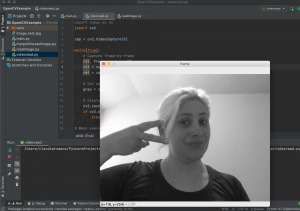

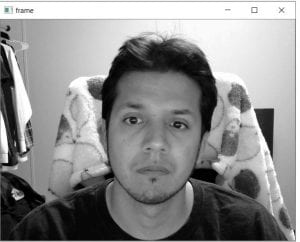

This program is by far the most applicable to my current assignment. My students, current and future, are going to be amazed at the excitement these new tools will bring to my Computer Science classroom: computer vision with OpenCV, artificial intelligence, machine learning and all its capabilities, and working with significant amounts of data through Kaggle, just to name a few.